Lynxius Setup

Begin your LLM evaluation with Lynxius in just one minute!

Sign up for free to create an account. Don't forget to verify your email!

Install the Lynxius Library

Install the Lynxius Python library locally or on your server:

```shell
pip install lynxius
```
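To confirm the install from Python, you can query the installed distribution with the standard library's `importlib.metadata`. This is a minimal sketch; the helper name `installed_version` is hypothetical and is not part of the Lynxius library.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is not installed."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    v = installed_version("lynxius")
    # Print the version if found, otherwise remind the user how to install it.
    print(f"lynxius {v}" if v else "lynxius is not installed; run `pip install lynxius`")
```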

Create Your First Project and API Key

Create your first project and an API key for it to start your LLM evaluations. Remember to store your secret key somewhere safe: you won't be able to view its value again.

Use the newly generated secret key to set the `LYNXIUS_API_KEY` environment variable locally or on your server:

Bash / Zsh:

```shell
export LYNXIUS_API_KEY='your-api-key-here'
```

Windows Command Prompt:

```bat
set LYNXIUS_API_KEY=your-api-key-here
```
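Because the client is configured through this environment variable, it can help to fail fast when the key is not visible to your Python process (for example, because it was exported in a different terminal session). A minimal standard-library sketch; `require_api_key` is a hypothetical helper, not a Lynxius API.

```python
import os
from typing import Mapping, Optional


def require_api_key(name: str = "LYNXIUS_API_KEY",
                    env: Optional[Mapping[str, str]] = None) -> str:
    """Return the stripped value of an API key variable, or raise a clear error if unset/empty."""
    source = os.environ if env is None else env
    value = source.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; export it before creating a LynxiusClient.")
    return value
```

Calling `require_api_key()` at the top of your script surfaces a missing key immediately, instead of later when the first evaluation is submitted.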

Select Evaluation Setup

Remote vs Local Evaluations

The Lynxius remote evaluation setup is a fully managed service: the API keys for the models used during evaluation are provided and managed by the Lynxius team. Evaluation tasks run in the background, so you don't have to wait for them or pay for their compute.

The Lynxius local evaluation setup is convenient if you prefer to use your own OpenAI credits (including any free credits) and are comfortable managing the API keys for the models used during evaluation.

If you plan to run evaluations remotely, you can skip ahead to Start Evaluating. If you prefer to run evaluations locally, make sure your OpenAI API key is set in your environment:

Bash / Zsh:

```shell
export OPENAI_API_KEY='your-api-key-here' # only needed to run evals locally
```

Windows Command Prompt:

```bat
REM only needed to run evals locally
set OPENAI_API_KEY=your-api-key-here
```
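The two setups differ only in which keys must be present: both need `LYNXIUS_API_KEY`, and local evaluation also needs `OPENAI_API_KEY`. A hypothetical helper like the one below (not part of the Lynxius library) can report what is missing for the setup you chose before you create the client.

```python
import os
from typing import List, Mapping, Optional


def missing_keys(run_local: bool, env: Optional[Mapping[str, str]] = None) -> List[str]:
    """List the environment variables the chosen setup needs that are unset or empty."""
    source = os.environ if env is None else env
    required = ["LYNXIUS_API_KEY"]          # needed for both remote and local evals
    if run_local:
        required.append("OPENAI_API_KEY")   # only needed to run evals locally
    return [name for name in required if not source.get(name)]
```

An empty list from `missing_keys(run_local=True)` means the local setup is ready; you would then pass the same value to `LynxiusClient(run_local=...)`.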

Start Evaluating

Pick an evaluator from our library and run your evaluations!

Remote evaluation:

```python
from lynxius.client import LynxiusClient
from lynxius.evals.answer_correctness import AnswerCorrectness

# add tags for frontend filtering
label = "PR #111"
tags = ["GPT-4", "chat_pizza", "PROD", "Pizza-DB:v2"]
answer_correctness = AnswerCorrectness(label=label, tags=tags)

client = LynxiusClient()

answer_correctness.add_trace(
    query="What is pizza quattro stagioni? Keep it short.",
    # reference from Wikipedia (https://github.com/lynxius/lynxius-docs/blob/main/docs/public/images/quattro_stagioni_wikipedia_reference.png)
    reference=(
        "Pizza quattro stagioni ('four seasons pizza') is a variety of pizza "
        "in Italian cuisine that is prepared in four sections with diverse "
        "ingredients, with each section representing one season of the year. "
        "Artichokes represent spring, tomatoes or basil represent summer, "
        "mushrooms represent autumn and the ham, prosciutto or olives represent "
        "winter."
    ),
    # output from OpenAI GPT-4 (https://github.com/lynxius/lynxius-docs/blob/main/docs/public/images/quattro_stagioni_gpt4_output.png)
    output=(
        "Pizza Quattro Stagioni is an Italian pizza that represents the four "
        "seasons through its toppings, divided into four sections. Each section "
        "features ingredients typical of a particular season, like artichokes "
        "for spring, peppers for summer, mushrooms for autumn, and olives or "
        "prosciutto for winter."
    ),
)

client.evaluate(answer_correctness)
```

Local evaluation (requires `OPENAI_API_KEY`):

```python
from lynxius.client import LynxiusClient
from lynxius.evals.answer_correctness import AnswerCorrectness

# add tags for frontend filtering
label = "PR #111"
tags = ["GPT-4", "chat_pizza", "PROD", "Pizza-DB:v2"]
answer_correctness = AnswerCorrectness(label=label, tags=tags)

client = LynxiusClient(run_local=True)  # run evals locally

answer_correctness.add_trace(
    query="What is pizza quattro stagioni? Keep it short.",
    # reference from Wikipedia (https://github.com/lynxius/lynxius-docs/blob/main/docs/public/images/quattro_stagioni_wikipedia_reference.png)
    reference=(
        "Pizza quattro stagioni ('four seasons pizza') is a variety of pizza "
        "in Italian cuisine that is prepared in four sections with diverse "
        "ingredients, with each section representing one season of the year. "
        "Artichokes represent spring, tomatoes or basil represent summer, "
        "mushrooms represent autumn and the ham, prosciutto or olives represent "
        "winter."
    ),
    # output from OpenAI GPT-4 (https://github.com/lynxius/lynxius-docs/blob/main/docs/public/images/quattro_stagioni_gpt4_output.png)
    output=(
        "Pizza Quattro Stagioni is an Italian pizza that represents the four "
        "seasons through its toppings, divided into four sections. Each section "
        "features ingredients typical of a particular season, like artichokes "
        "for spring, peppers for summer, mushrooms for autumn, and olives or "
        "prosciutto for winter."
    ),
)

client.evaluate(answer_correctness)
```

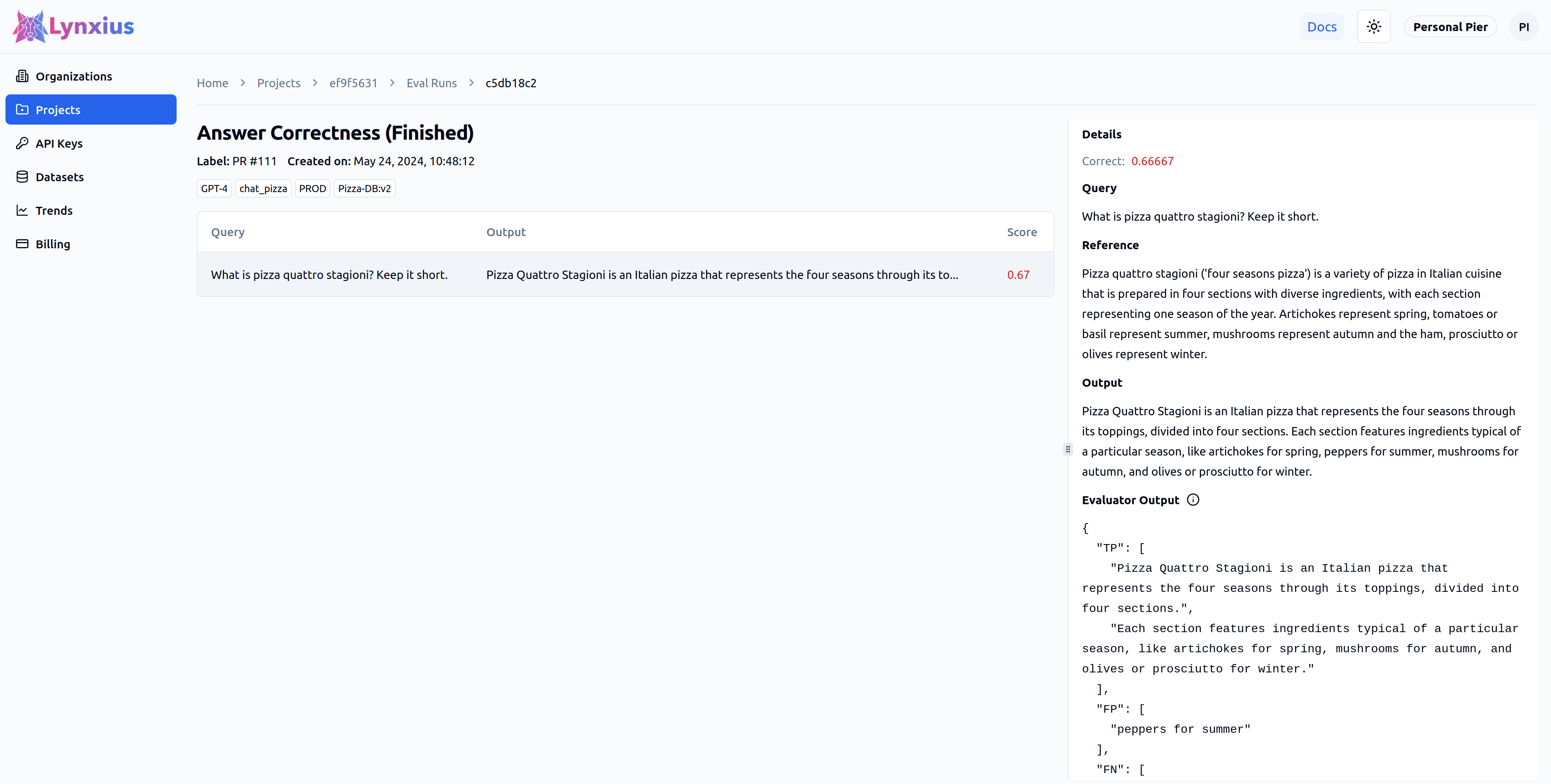

Click the Eval Run link in your project to explore the output of your evaluation. The screenshot above shows the result in the Lynxius UI, and the result values are explained below.

| Score | Value | Interpretation |
|---|---|---|
| Correct | 0.66667 | The output is only partially correct when compared to the reference. |

| Evaluator Output |
|---|
| A good part of the tokens in the reference are similar to the output text. |
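The evaluator output describes the score in terms of overlap between reference tokens and output tokens. To build intuition for what such a comparison measures, here is a deliberately simple token-recall sketch: the share of distinct reference words that also appear in the output. This is an illustrative assumption only; it is not how Lynxius computes the `AnswerCorrectness` score, and it should not be expected to reproduce the value in the table.

```python
import re
from typing import Set


def tokens(text: str) -> Set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))


def token_recall(reference: str, output: str) -> float:
    """Fraction of distinct reference tokens that also occur in the output (0.0 to 1.0)."""
    ref = tokens(reference)
    if not ref:
        return 0.0
    return len(ref & tokens(output)) / len(ref)
```

As a toy example, `token_recall("mushrooms represent autumn", "mushrooms for autumn")` is 2/3, because two of the three reference words appear in the output. Real evaluators typically go beyond exact word matches, so treat this only as a mental model of "token similarity".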