Lynxius

The Lynxius LLM observability and evaluation platform accelerates your LLM Apps' time-to-market.

AI engineers and data scientists can use the Lynxius platform to monitor and evaluate LLM Apps through every development phase, from experimentation to post-production. Critical metrics such as answer correctness, BERTScore, answer completeness, factuality, and context precision can be measured with enhanced precision, so that you can ship AI with confidence.

Lynxius streamlines collaboration by replacing spreadsheets and simplifying how AI developers and non-technical Subject Matter Experts (SMEs) work together. The Lynxius platform makes it easy for SMEs to grasp LLM evaluation metrics without knowing the underlying math, boosting productivity and innovation.

Demo (3 min)

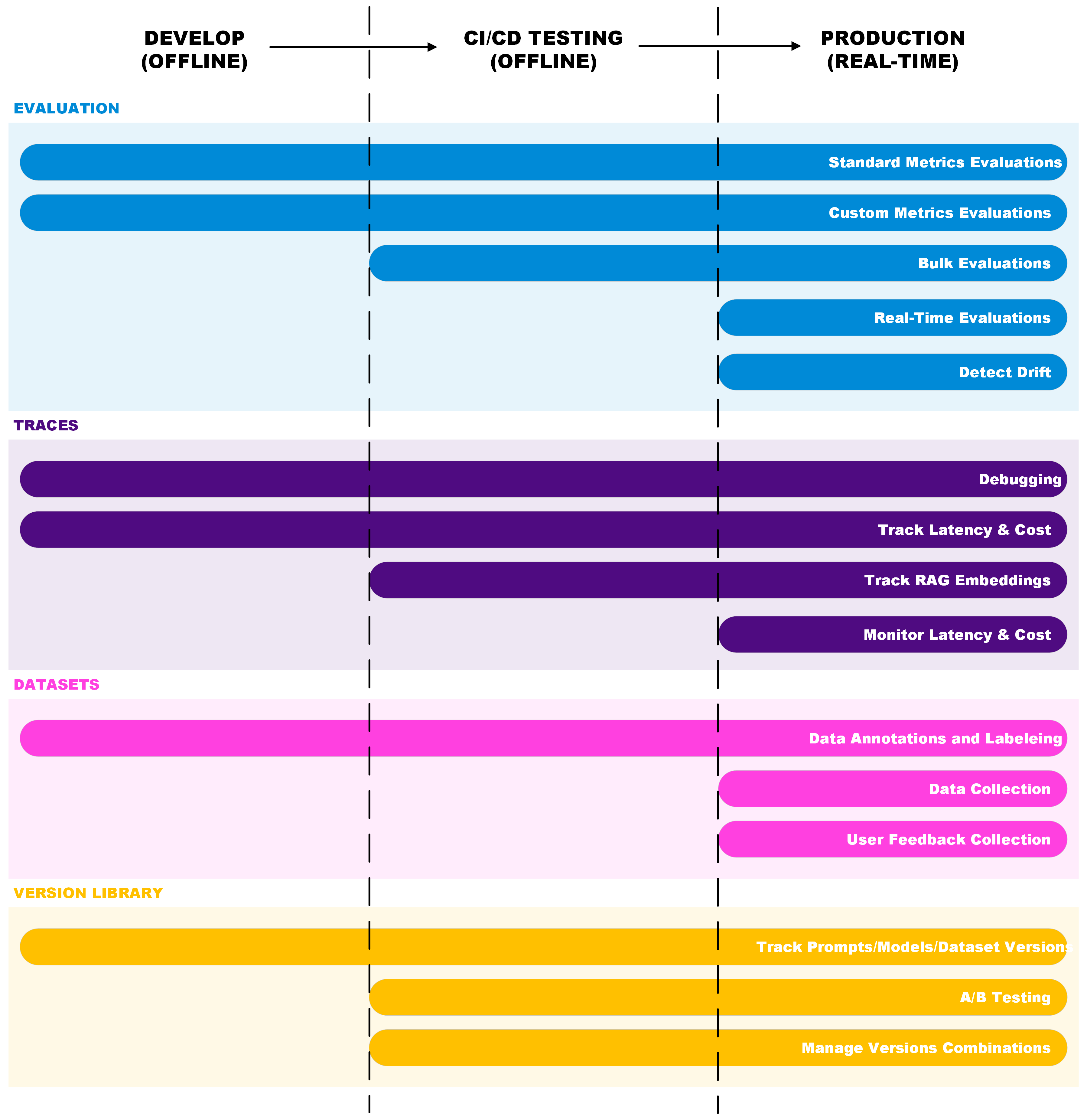

Supporting your Entire Development Journey

At Lynxius, we understand that experimenting with an LLM App and scaling it in production pose distinct challenges. Our platform supports your entire development journey: initial development, automated testing in a continuous integration environment, deployment, and post-production monitoring.

Quickstarts

- Quickstart Evaluation: Evaluate the performance of your LLM App and ship it to market fast.
- Quickstart Tracing: Track and inspect each inner component of your LLM App to quickly identify bugs.